The API-fication of Work

The early winners in AI (aside from ChatGPT, the only consumer winner so far) all have one thing in common: they automate job functions that people used to do. This is true of Sierra, Cursor, Claude Code, Writer, Harvey, and others.

Understandably, this has created a lot of fear, uncertainty, and doubt. Some believe AI is coming for jobs so fast that we won’t be able to adjust as a society. Others point to the technology’s limitations and argue that these worries are overhyped.

The API-fication of Work

My view is that we’re going through what I’d call the API-fication of Work.

AI doesn't replace entire jobs. It replaces specific functions within jobs. A software engineer's job includes writing code, reviewing PRs, mentoring junior talent, and architecting systems. AI can already do the first two. The last two are resistant (more on that below).

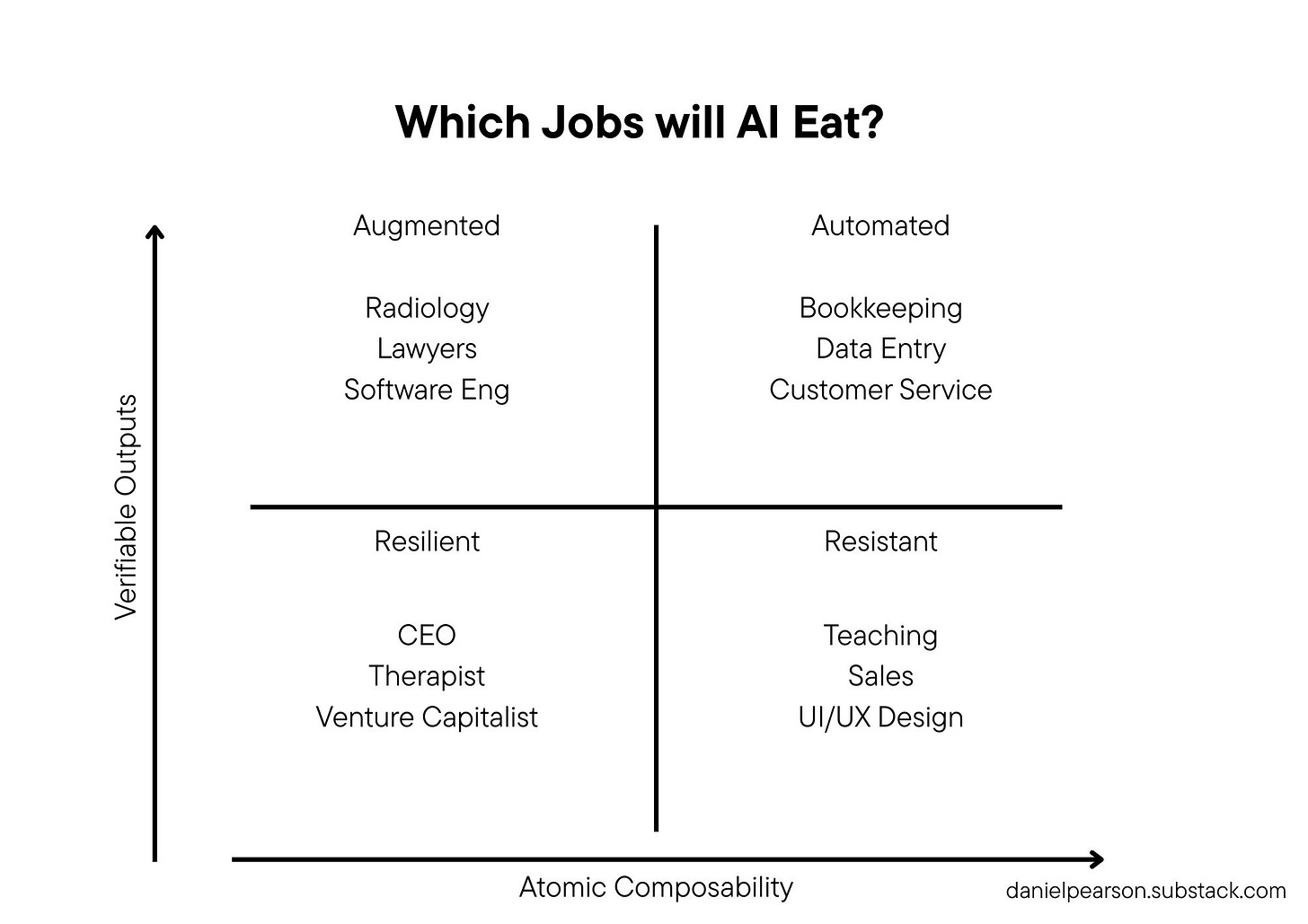

Software is composable: it is built from discrete functions with defined inputs, outputs, and tests for success. AI consumes functions within human jobs the same way: it needs atomic tasks with verifiable results. When the functions being replaced are human job functions, a verifiable result is one that humans agree counts as a success. The more a job function resembles an API (predictable request/response patterns with clear success metrics that humans agree on), the faster AI will eat it.

Two Composability Requirements for AI and Why Some Jobs Are More Resistant

First, the job must have atomic composability: it must decompose into discrete functions. Job functions like categorizeExpense(), generateContract(), and answerSupportTicket() are each atomic and independent.

Second, success of the function must be verifiable. The code compiles or doesn't. The books balance or they don't. The customer issue closes or doesn't. Without agreement on what "correct" looks like, AI can't be trusted.
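To make the two requirements concrete, here is a minimal sketch that treats one job function as an API: a typed request and response, plus a verifier that encodes what humans have agreed "correct" means. Everything here is hypothetical and for illustration only. The `JobFunction` interface, the keyword rules standing in for an AI model, and the human-labeled test cases are all assumptions, not any product's real API.

```typescript
// Hypothetical sketch: a job function modeled as an API with a verifier.
// JobFunction, the category rules, and the labeled cases are illustrative assumptions.

type Category = "travel" | "meals" | "software" | "other";

interface Expense {
  vendor: string;
  amountCents: number;
}

// Requirement 1 (atomic): a typed request/response with no hidden state.
// Requirement 2 (verifiable): a success check that humans have agreed on.
interface JobFunction<In, Out> {
  name: string;
  run: (input: In) => Out;
  verify: (output: Out, expected: Out) => boolean;
}

interface LabeledCase<In, Out> {
  input: In;
  expected: Out; // the answer a human reviewer agreed is correct
}

const categorizeExpense: JobFunction<Expense, Category> = {
  name: "categorizeExpense",
  // Stand-in for an AI model: a toy keyword lookup on the vendor name.
  run: (e) => {
    const v = e.vendor.toLowerCase();
    if (v.includes("airline") || v.includes("hotel")) return "travel";
    if (v.includes("cafe") || v.includes("restaurant")) return "meals";
    if (v.includes("saas") || v.includes("cloud")) return "software";
    return "other";
  },
  // Success means matching the human-agreed label exactly.
  verify: (output, expected) => output === expected,
};

// Fraction of labeled cases where the function's output passes verification.
function passRate<In, Out>(fn: JobFunction<In, Out>, cases: LabeledCase<In, Out>[]): number {
  if (cases.length === 0) return 0;
  const passed = cases.filter((c) => fn.verify(fn.run(c.input), c.expected)).length;
  return passed / cases.length;
}

const cases: LabeledCase<Expense, Category>[] = [
  { input: { vendor: "Acme Airline", amountCents: 42000 }, expected: "travel" },
  { input: { vendor: "Corner Cafe", amountCents: 1800 }, expected: "meals" },
  { input: { vendor: "Widget Co", amountCents: 9900 }, expected: "software" },
];

console.log(`${categorizeExpense.name}: ${passRate(categorizeExpense, cases).toFixed(2)}`);
// prints "categorizeExpense: 0.67" (the toy rules miss "Widget Co")
```

The point of the design is that the pass rate is only meaningful because `verify` exists. A function like "build trust" has no agreed `expected` value to compare against, so there is nothing to measure.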

This is why I believe some jobs will resist AI, at least in the “whole job” sense. Therapists can't decompose “build trust” into pure functions. A CEO can't API-fy “read the room.” A creative director has no unit test for their taste. These jobs will resist AI for longer because they're stateful and context-heavy, and because their success is judged subjectively by humans who can't articulate the evaluation criteria.

The Assembly Line for Knowledge Work

Just as we spent the last century turning craftsmanship into assembly lines, making workers more productive and goods cheaper, I think we may be entering an assembly-line era for knowledge work. The likely result is more productive knowledge workers and cheaper services.

There is so much value to capture in the API-fication of job functions that businesses will have a strong incentive to carry out a “forced decomposition” of jobs. They won’t do it because it improves jobs for humans, but because it makes job functions more AI-readable and automatable.

In my world (digital marketing), a “forced decomposition” could turn 'Manage Facebook ads' into something like 50 micro-functions: validateBrandCompliance(), predictCreativeFatigue(), selectNextTest(), and so on. Each function gets its own AI and its own success metrics. The media buyer who currently orchestrates campaigns could become a reviewer of exception reports, handling only the 5% of decisions the AIs can't make with confidence.
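A rough sketch of how that exception-report workflow might be wired up, under loud assumptions: each micro-function is a stub that returns a decision with a self-reported confidence score, and anything below a hypothetical 0.9 threshold goes to a human reviewer instead of being applied automatically. The function bodies, scores, campaign ID, and threshold are all made up for illustration. They do not reflect any real ad platform's API.

```typescript
// Hypothetical sketch of a "forced decomposition" pipeline with an exception report.
// Stub micro-functions, confidence scores, and the 0.9 threshold are illustrative only.

interface Decision {
  step: string;
  action: string;
  confidence: number; // 0..1, as reported by the (assumed) AI behind each step
}

type MicroFunction = (campaignId: string) => Decision;

// Stubs standing in for three of the ~50 micro-functions.
const validateBrandCompliance: MicroFunction = () => ({
  step: "validateBrandCompliance", action: "approve creative", confidence: 0.97,
});
const predictCreativeFatigue: MicroFunction = () => ({
  step: "predictCreativeFatigue", action: "rotate creative", confidence: 0.62,
});
const selectNextTest: MicroFunction = () => ({
  step: "selectNextTest", action: "test new headline", confidence: 0.93,
});

interface RunResult {
  automated: Decision[]; // applied without a human
  exceptions: Decision[]; // routed to the media buyer's exception report
}

function runPipeline(campaignId: string, steps: MicroFunction[], threshold = 0.9): RunResult {
  const result: RunResult = { automated: [], exceptions: [] };
  for (const step of steps) {
    const decision = step(campaignId);
    if (decision.confidence >= threshold) {
      result.automated.push(decision);
    } else {
      result.exceptions.push(decision);
    }
  }
  return result;
}

const report = runPipeline("campaign-123", [
  validateBrandCompliance,
  predictCreativeFatigue,
  selectNextTest,
]);
console.log("Automated:", report.automated.map((d) => d.step));
console.log("For human review:", report.exceptions.map((d) => d.step));
```

In this toy run, only `predictCreativeFatigue` lands in the exception report. The human's job shrinks to the decisions that fall below the threshold, which is the shift from orchestrator to reviewer described above.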

Are we about to spend the next decades turning knowledge work into APIs so AI can eat it? What will we lose when every job function requires a unit test?